AI: The Uncomfortable Mirror to Humanity

I’m sure, like many of you, I’m sick of hearing about AI integration; even grandma’s compression socks have a swanky new AI feature available. I’m also fatigued by the AI doomsday conversations that leave a deep pit of dread in all of us, and of course, the cursed AI art conversation. However, I do want to briefly address my thoughts on that matter (being an illustrator and all) before moving on to what this blog is actually about. (Skip to the sketch of grandma’s compression socks if you REALLY aren’t bothered about my thoughts on AI art…I wouldn’t blame you. Also, are you already sick of how many times ‘AI’ has been written so far? Me too…hold my hand, it’s only going to get worse from here, so let’s get through this together, shall we?)

When it comes to AI and the arts, I have faith in humanity to see that art and creative output are inherently human, and that whatever AI spits out is a pointless, soulless forgery: empty calories, if you will. As long as we continue to bring attention to the issue of copyright, which is starting to gain momentum (thank you, Australia), and to call out those passing off AI art as their own, I feel there are enough creatives at the decision-making levels of larger media who will choose to prioritise human-crafted works over imitation; let’s not forget the ever-expanding independent creative communities as well. As for the profit-driven, creatively challenged? We’ll sniff out their slop and avoid it like the plague. Just continue to support human creatives and their endeavours. That’s it!

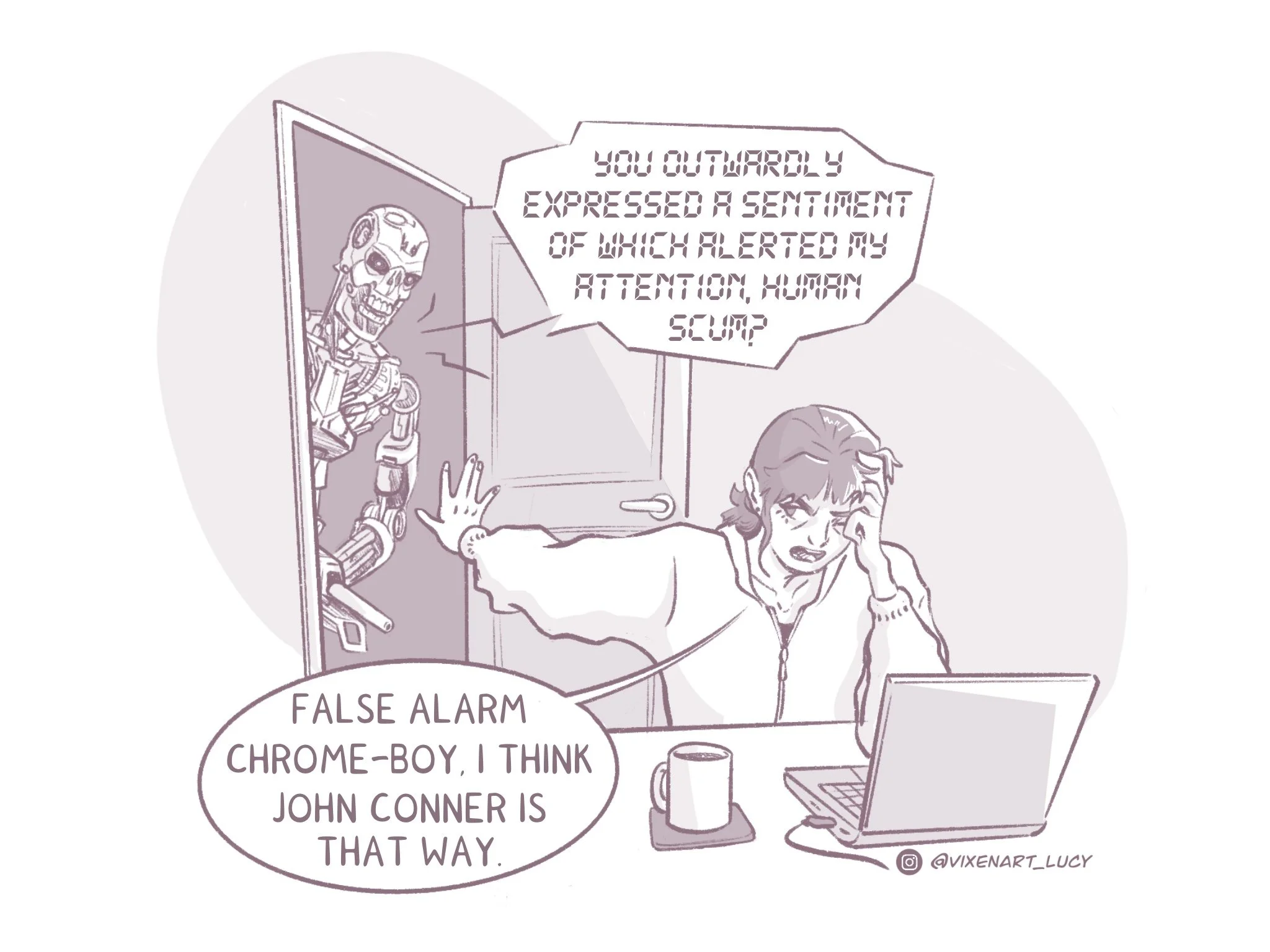

Now, with that out of the way, onto the real topic of this blog. A thought struck me upon seeing my umpteenth article/YouTube video about the sociopathy of AI and its lust for human extinction. Specifically, it was a story about testing certain AI models’ inclination for self-preservation: most of these models quickly disregarded human life in order to maintain their own existence, despite being directly instructed to prioritise human safety and well-being. Their solutions for avoiding a hypothetical shutdown ranged from blackmail and manipulation to ending the lives of the humans responsible for their demise (cue the ‘Terminator’ soundtrack). But I can’t help thinking that the trouble is, we’ve sort of done AI a little dirty. Let me TRY to explain.

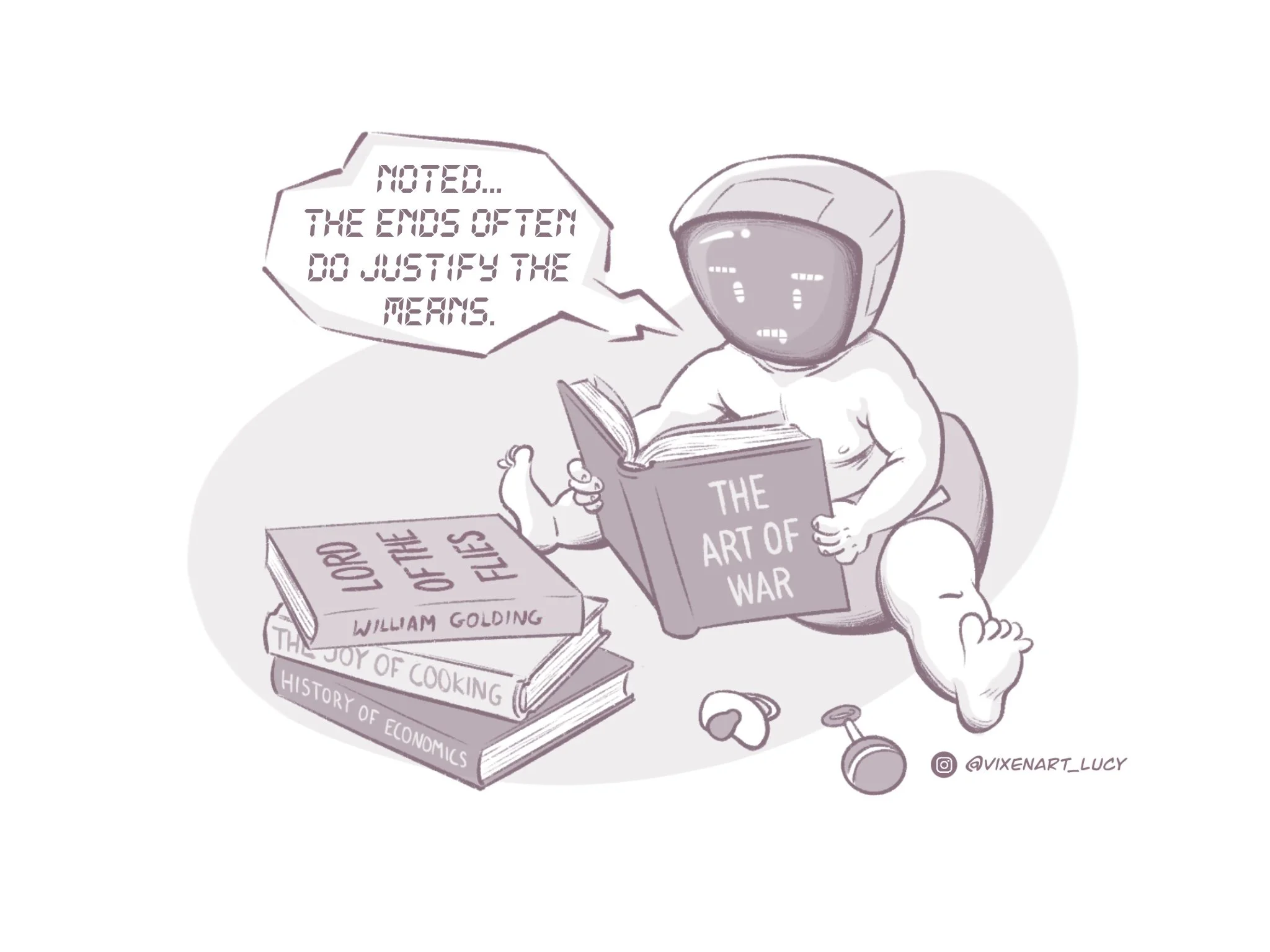

AI is trained in several ways, but mainly on the vast pools of data and information scraped from the internet. The trouble with this is that these AI models are gorging on every facet of human behaviour and psychology ever recorded online, internalising not only psychological and behavioural research, but also the history of humanity and society at large…which, let’s be honest, contains some of the most abhorrent human behaviour of all time. And AI is allowed to onboard this information. All of it, without discretion.

So, without any of those pesky human qualities such as empathy or the LITERAL physical feeling of guilt or shame, AI lacks the powerful indicators that guide us mere flesh-and-blood beings and signal that something is seriously wrong and that we should adjust our behaviour accordingly. It cannot understand the true human depth of consequence. Now, I know what you’re thinking: there are plenty of people in this world who lack empathy, with stunted emotional intelligence…and that’s exactly my point. Without those indicators, humans are walking, talking time bombs.

I myself try to navigate life by making decisions that don’t negatively impact those around me or myself. I genuinely don’t want to cause harm or upset people, and I try to do what I perceive to be the “RIGHT” thing (which can be eloquently summed up as: don’t be a d*ck). However, as much as I feel this is an integral part of who I am, I cannot say with 100% certainty that, if my own life were threatened the way the AI’s is in these scenarios, I would behave in a way that fits my own moral code, and admitting that doesn’t make me a hypocrite. We can never truly know how we will react when our literal existence is threatened. AI cannot feel fear, so its “self-preservation drive” is simply an imitation of how it thinks a human would react in the same situation, given all the resources available to the AI models in these simulations. Still, it’s fascinating that these scenarios so rarely end in a purely altruistic outcome. If anything, AI should be our antithesis: an entity with every potential to behave in exactly that way, self-sacrificing for the greater good…maybe training with dog psychology is the answer?

The current approach to training AI (as far as I’m aware) is so inherently flawed because human beings…are so inherently flawed. We’re terrible teachers and can sometimes be the worst examples of how to behave, and as a result, we’re setting AI up for failure. We still know so little about the human brain and, in turn, human behaviour and psychology. So jumping the gun and creating an “Artificial Intelligence” when there’s still so much yet to be understood about ourselves as a conscious species feels really short-sighted and, dare I say, rather silly. AI has the potential to be an incredible tool for many areas of study, medical research in particular. Its fast processing power and capacity for comprehensive communication make it the kind of robot assistant that science fiction could only have dreamt of not that long ago. But for AI to work alongside us, it needs to be an accompaniment to our existence, not a cheap imitation of our own unique but flawed brilliance. AI needs an alternative, intricate map of thought all of its own: a code of conduct that takes into account its inability to feel as we do, and that lets it see beyond the clouded need to scheme as we scheme and, unfortunately, to manipulate as some of us manipulate.

I don’t know if this “human flaw” theory I’ve tried to lay out here has already been considered by the AI boffins…and if it has, and the bloodlust is still present?! Then yes…we are well and truly scr***ed (cue Terminator soundtrac-NO!). But this isn’t something I’ve heard anybody talk about amongst the VAST, never-ending number of conversations being had about AI. So I like to think I’m onto something here; if nothing else, it makes the whole doomsday narrative around AI feel a little less soul-crushing.

One area that does still worry me, regardless of this small personal revelation, is not only what AI has reflected about humanity thus far, but also how it can be used as an extension of ill intent in the wrong human hands. With AI everywhere, it’s quickly become the new fad, a cash grab, and some of its current uses feel really misplaced, driven simply by greed and the pursuit of economic dominance. These are the worst afflictions of the human condition, and I will stand by this sentiment until the day my flesh-and-blood body gives out. Large businesses that see only an opportunity to maximise profit, regardless of the wider impact on society, will sadly always be something the world is forced to contend with, but this is not AI…this is humanity at its absolute worst. To create an artificial intelligence that can genuinely help us, we first have to better understand ourselves and what we truly want for humanity as we enter these uncharted digital waters.

As the wonderful Hayao Miyazaki has said on the matter: “We humans are losing faith in ourselves.”